A Study in Chrome: The Ethics of Silverside [PAX East 2015]

I spent last weekend at PAX East, one of the biggest gaming conventions in the US: over the course of the weekend, around 70,000 nerds descended upon the Boston Convention and Exhibition Center (BCEC) to revel in video games, board games, and all-around nerdiness. This PAX East was my fourth as an attendee, but my first as a “special guest”.

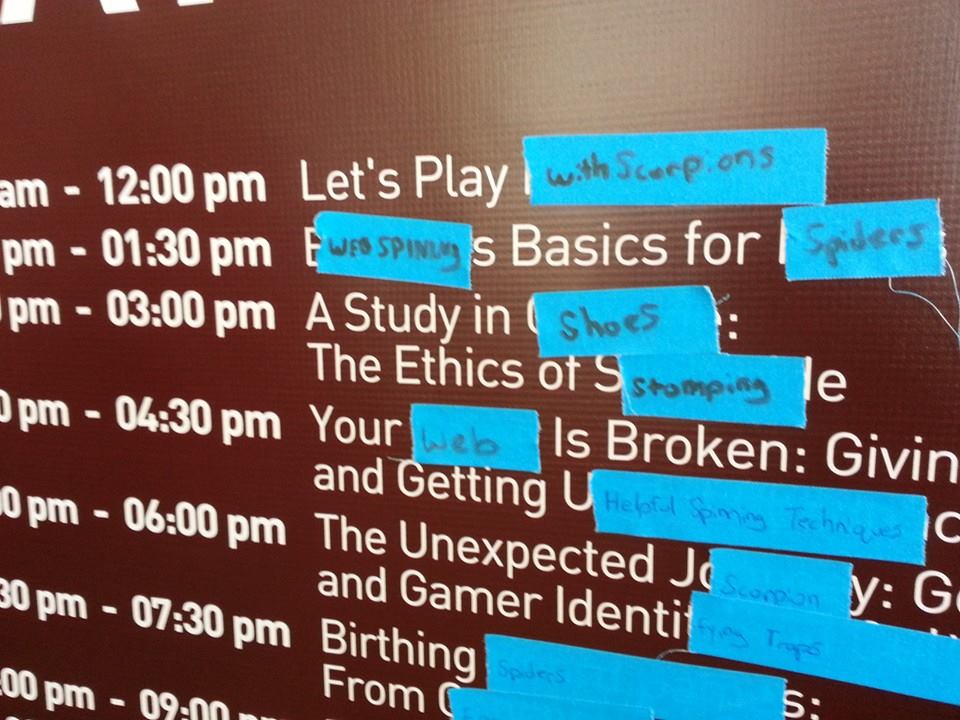

This year, I was designated a “special guest” because I organized and sat on a panel. Not on video games, but on robot ethics. Our panel was entitled “A Study in Chrome: The Ethics of Silverside”, and had the following description:

“Should robots be given guns? Should robots be used as sex workers? These are among the many interesting ethical questions raised by Penny Arcade’s ‘Automata: Silverside’. While ‘Automata’ may be a work of fiction, robot warriors and robot sex workers are not; they are in use today, for better or worse. Join researchers from the field of Human-Robot Interaction for a conversation on robot ethics, and learn what today’s leading robot-ethicists have to say about the ethics of ‘Silverside’.”

For those of you who aren’t familiar with it, Automata: Silverside is a short comic series written by the folks at Penny Arcade (the hosts of PAX, or “Penny Arcade eXpo”). It’s a Tech Noir comic about a pair of detectives: Sam, who’s human, and Carl, who’s a robot. While the comic is pretty short, it manages to touch on several issues that are of interest to those who study robot ethics.

The Idea

In our panel, my labmates Willie, Gordon, and I each talked about one such issue. I talked about whether robots should be allowed to wield guns autonomously, Willie talked about the ethics of robots in sex and relationships, and Gordon talked about the moral status of robots in general.

<Disclaimer> While the three of us do research in the HRI Lab, we gave our talk as private citizens and did not announce our shared affiliation during it; what we said in the panel reflects our own views, not those of our employer or funding agencies. </Disclaimer>

For each issue, we first showed panels from the comic that illustrated how that issue is portrayed in Silverside, then discussed that issue with respect to the current state of the art in robotics, and finally discussed what the experts in robot ethics have to say about that issue. I won’t go into the details of the talk in this post, since I’ve previously blogged about robots with guns, but I’ve embedded our slideshow below (note that you can access the speaker notes), as well as the videos of our presentation (which are unfortunately a bit hard to hear). If my labmates blog about the issues they chose, I’ll also add links to those. I will, however, go into detail about our Q&A session and describe the overall results of the panel, so keep on scrolling past those videos!

The Panel

I have to admit that I was a little nervous that only a handful of people would turn up for our panel. This was not the case. About an hour before it started, people started lining up, which I decided to ignore and refused to talk about so as not to psych myself out. Cut to ten minutes before our panel, when the doors to our room (Arachnid Theater) opened and the crowd filed in. The room wasn’t full, but we probably ended up with about 150 people. The first sign that things were going well was that, after our talk ended and our Q&A session began, pretty much nobody left (I’d been fearing a mass exodus. I worry, okay?). The second sign was that the questions we got were great. Here are truncated and polished versions of those questions, along with truncated and polished versions of our answers.

1) Stephen Hawking and other high-profile figures have recently raised fears about the singularity. Are these fears justified?

Given our experience working with robots, where it’s a challenge just to get them to drive through doors properly, we tend to view an apocalyptic event of this sort as much more science fiction than science, at least for the foreseeable future.

While technology is certainly advancing rapidly, its speed of adoption is limited by real-world factors such as product cycles and legislation, which tend to move very slowly. Take the tablet: tablets had been around for decades, but it wasn’t until Apple released the iPad that consumers embraced the technology. Driverless cars are another example: they have also technically been around for several decades now, yet they are still not something you can buy, in part because the legislation around them is still catching up.

2) Would it be ethical to form a robot’s personality from information scraped from the internet about another person? Would this lead to the “copyrighting” of personalities?

To answer this question, you’d first need to decide what a “personality” actually is, a question which doesn’t yet have a universally agreed-upon answer. One could envision, however, scraping all of a person’s information from their interactions with machines, and copyrighting or otherwise protecting that information. If you’re interested in this topic, we highly suggest you check out the show Black Mirror (currently available on Netflix!), in particular Season 2, Episode 1, which deals with some of the implications of this question: could you scrape all the information about a dead person and bring them back as a chatbot? Or as an android? What would be the ethical ramifications? I don’t want to give any spoilers, so all I’ll say is go watch the episode. Maybe I’ll do a blog post on it in the future!

3) Does the Human Rights Watch’s attempt to ban autonomous weapons come from a “false perspective” that such a ban could realistically be enforced?

This question came up in the debate on autonomous weapons at AAAI, where Steve Goose of Human Rights Watch argued that the bans on landmines and cluster munitions have proven very effective, in part because the bans have caused those weapons to become heavily stigmatized. Thus, even though landmines are incredibly easy to make, their use is now mainly limited to a few rebel groups. Goose’s hope is that a ban on fully autonomous weapons systems would create a similar stigma.

4) Humans can subjectively discount strong evidence in the face of the unlikeliness of that evidence. Can machines do this, and if not, what are the implications for armed robots?

A lot of this comes down to how much you believe in the natural superiority of humans. Simple probabilistic systems are able to perform this type of evidence discounting, and there’s nothing in principle preventing robots from making subjective decisions that rely on even more nuanced social factors. That said, realizing those capabilities will take a lot of work.
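To make the “simple probabilistic systems” point a bit more concrete, here’s a minimal sketch of evidence discounting via Bayes’ rule. The scenario and numbers are entirely hypothetical (a made-up threat detector, not anything discussed at the panel): the detector flags 99% of genuine threats and false-alarms on 1% of non-threats, but only 1 in 1,000 people is actually a threat.

```python
def posterior(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """Bayes' rule: P(H | E) = P(E | H) * P(H) / P(E)."""
    # Total probability of seeing the evidence, whether or not H is true.
    p_evidence = p_evidence_if_true * prior + p_evidence_if_false * (1.0 - prior)
    return p_evidence_if_true * prior / p_evidence


# Hypothetical detector: strong evidence (99% hit rate, 1% false alarms)
# pointing at an unlikely hypothesis (prior of 1 in 1,000).
p = posterior(prior=0.001, p_evidence_if_true=0.99, p_evidence_if_false=0.01)
print(f"P(threat | alarm) = {p:.3f}")  # prints P(threat | alarm) = 0.090
```

Even though the alarm is “strong evidence”, the low prior pulls the conclusion down to about 9%; with a prior of 0.5, the same alarm would yield 0.99. Whether an armed robot should be trusted to set those priors is, of course, the hard part.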

5) If a human were to trade their limbs and organs one by one for prosthetics, and finally upload their mind, at what point (if any) would they cease to be human?

This goes back to a longstanding debate in the Philosophy of Mind and Philosophy of AI. Someone like Searle would say that unless you’re implementing this uploaded mind in human wetware, it’s not really alive or thinking. This also ties into the “hard problem of consciousness”: David Chalmers basically says that there are lots of “easy” problems in Philosophy and Psychology relating to how various mental processes work, but that understanding our subjective experience of consciousness and establishing whether “someone is home” in the mind is a significantly harder class of problem. If you move the mind from wetware to hardware in such a way that it still operates in the same way, is it fair to say that “the lights are on but no one’s home”? It’s hard to say, especially since the question presupposes that we even know what “consciousness” is, and it’s not clear that we do. Once again, it’s a monumentally hard problem.

6) Does using a vibrator constitute having sex with a robot?

David Levy’s book Love and Sex with Robots talks a lot about this, and about where the line gets drawn. Perhaps much of it has to do with whether one starts to form an emotional attachment to the machine, which would make the interaction something quite different. If you have an emotional bond with your vibrator, then perhaps you should view using it as having sex with a robot. And this type of connection will likely become more common as the machines we (as humans) use for sex gain more capabilities and more sophisticated behaviors.

7) What is the interplay between animal and robot ethics? As we develop a greater sense of animals as moral patients, how does this affect our view of robots as moral patients, and vice versa?

We can certainly take the criteria we use for establishing moral patiency for living creatures (i.e., animals) and apply them to robots. Also, more developed animals tend to display more human-like emotions, and much of our ethics towards animals may be guided by this: we don’t want to cause animals pain or suffering if they display emotional responses to such pain or suffering. Similarly, if a machine can feel pain or suffer, and produce a similar emotional response, we may be more reluctant to cause pain and suffering to that machine.

Unfortunately, I don’t have video of the last two questions, so I don’t have a full record of how we responded.

8) [This audience member essentially asked about the robot version of the trolley problem.]

We pointed this audience member towards Bertram Malle’s HRI paper.

9) As machine intelligence develops, we will have robots at differing levels of intelligence. What challenges will arise from this?

One challenge we noted was the legal challenge: how do you write legislation that accounts for robots with differing forms and levels of intelligence? Does it make sense to write legislation that is specific to robots, or should it be written with respect to artificial systems (embodied or otherwise) that have specific capabilities? Another very hard question.

The Aftermath

Overall, the results of the panel were inspiring. People seemed deeply engaged and eager to talk about these issues, with conversation spilling into the hallway for a good ten minutes after the panel ended. One of the audience members who kept the conversation going in the hall was a local English professor, who excitedly told us that our panel had been his one must-see panel of the weekend. The highlight of my night, however, occurred ten hours later, when, while riding the Boston subway home from the convention, I overheard a couple talking about the difference between machine and human cognition. I approached them and cautiously asked if they’d been to our panel. It turned out that they had, and that they had continued discussing the issues we’d raised for the rest of the day. My heart sang.
